Insurers, regulators race to harness power of AI

The business of insurance is powered by data. Always has been.

Data sharpens underwriting decisions, produces the most accurate risk assessments and helps to better connect insurance producers with potential buyers.

In short, data drives insurance. And that makes insurance a natural fit for an artificial intelligence revolution.

“It reminds me of the early days of the internet when it was exploding and everybody knew that it was going to change everything, but we weren’t sure exactly how it was going to change things,” said Mitch Dunford, chief marketing officer with the Risk and Insurance Education Alliance, during a recent webinar. “Here we are again. We know AI is going to be a bigger and bigger deal, and the insurance industry is embracing it.”

Of course, embracing the quick-change world of AI technology is not an easy move for many traditional insurance companies. It is not an industry known for its adventurous nature.

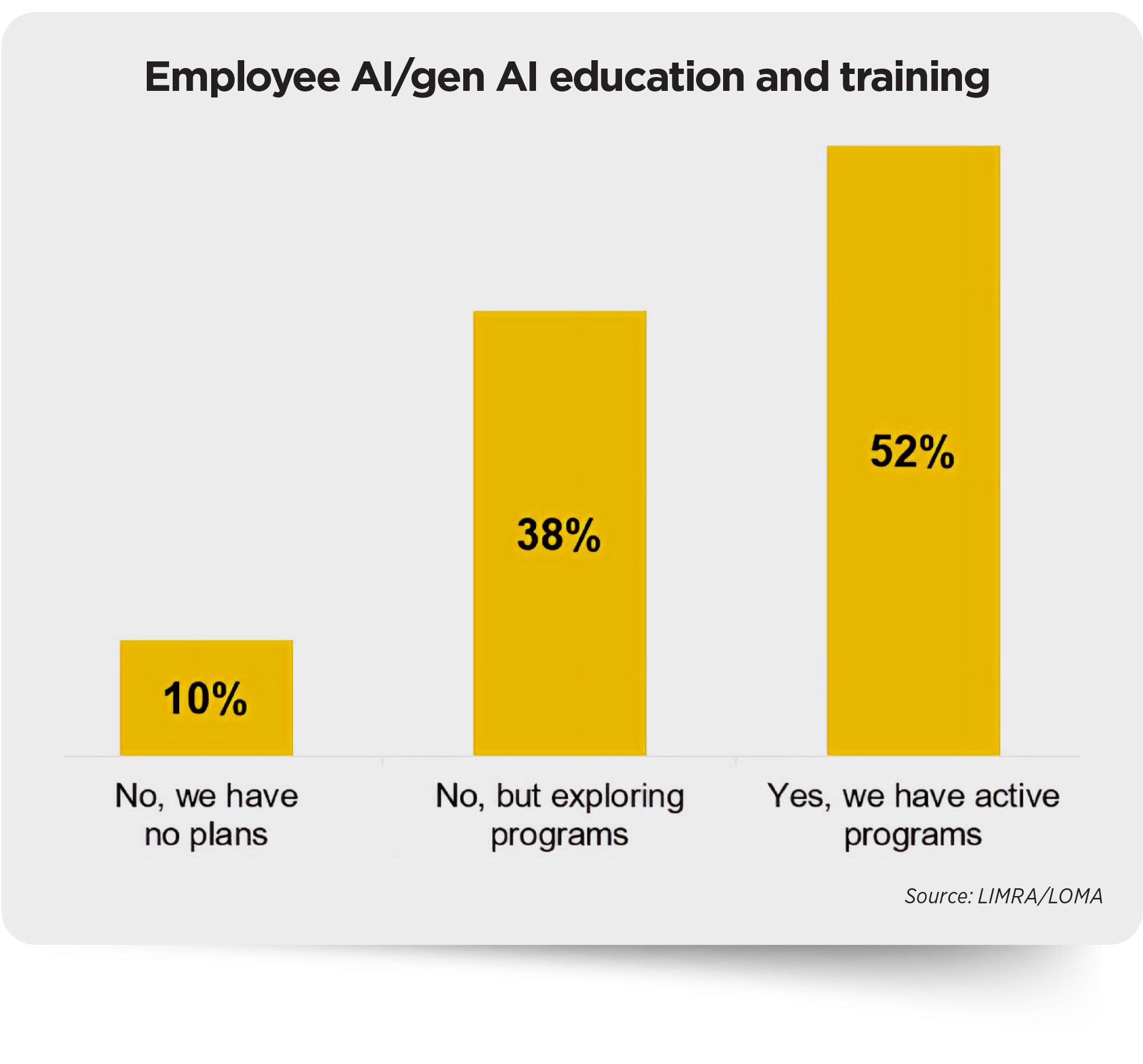

That leads to statistics like this one: 47% of technology executives say AI will have a significant impact on the insurance industry in the next three years, according to a LIMRA survey, but 48% of insurers do not have an AI training program yet.

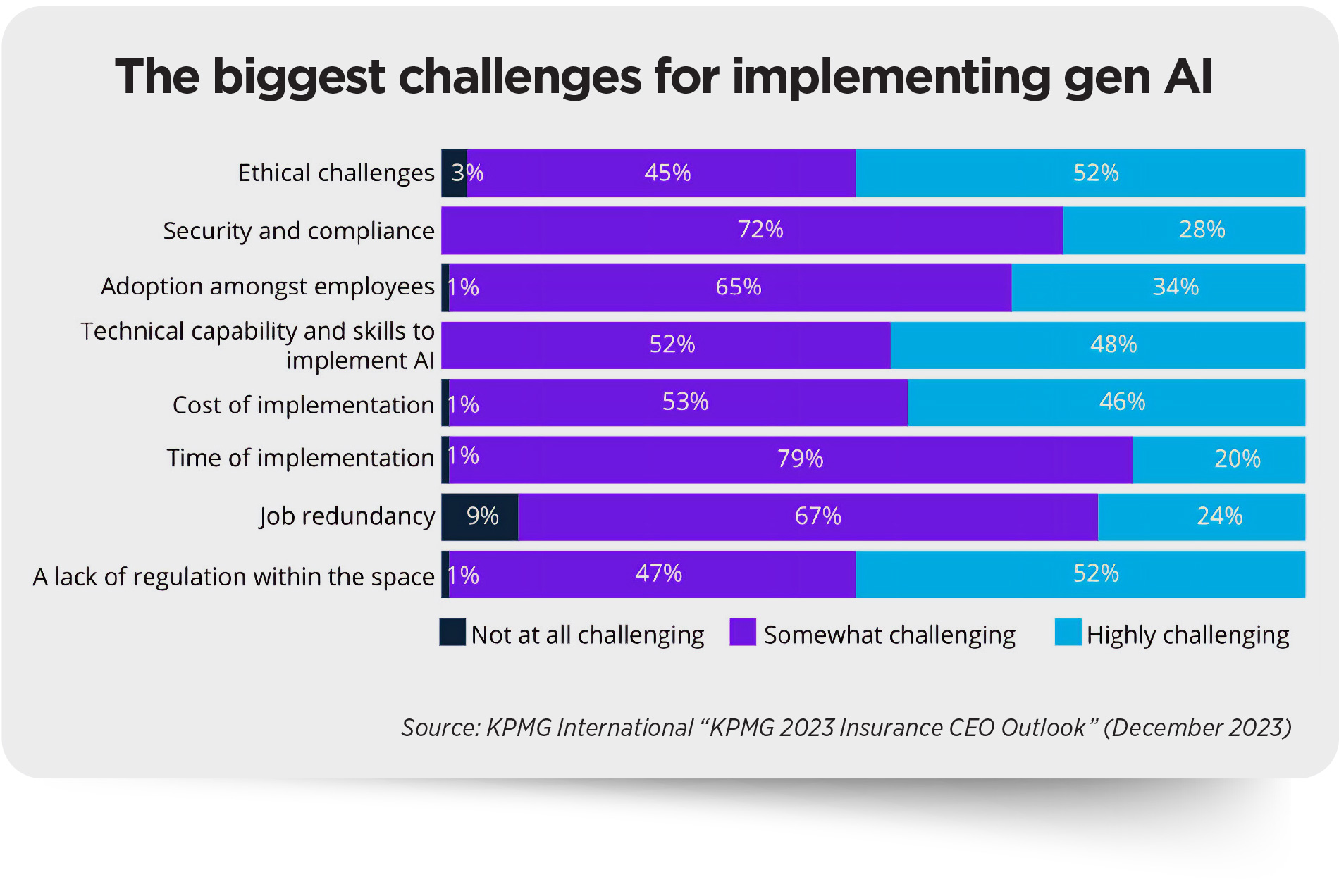

Then there are the obvious concerns over privacy and the unintended consequences of combining AI with mounds of data. Regulators are busy working on rules upon rules for those issues.

Add it up and you get an industry taking halting steps toward incorporating AI strategies, yet finding it increasingly hard to proceed with any deliberateness in an AI world that is evolving fast.

Hopes are high.

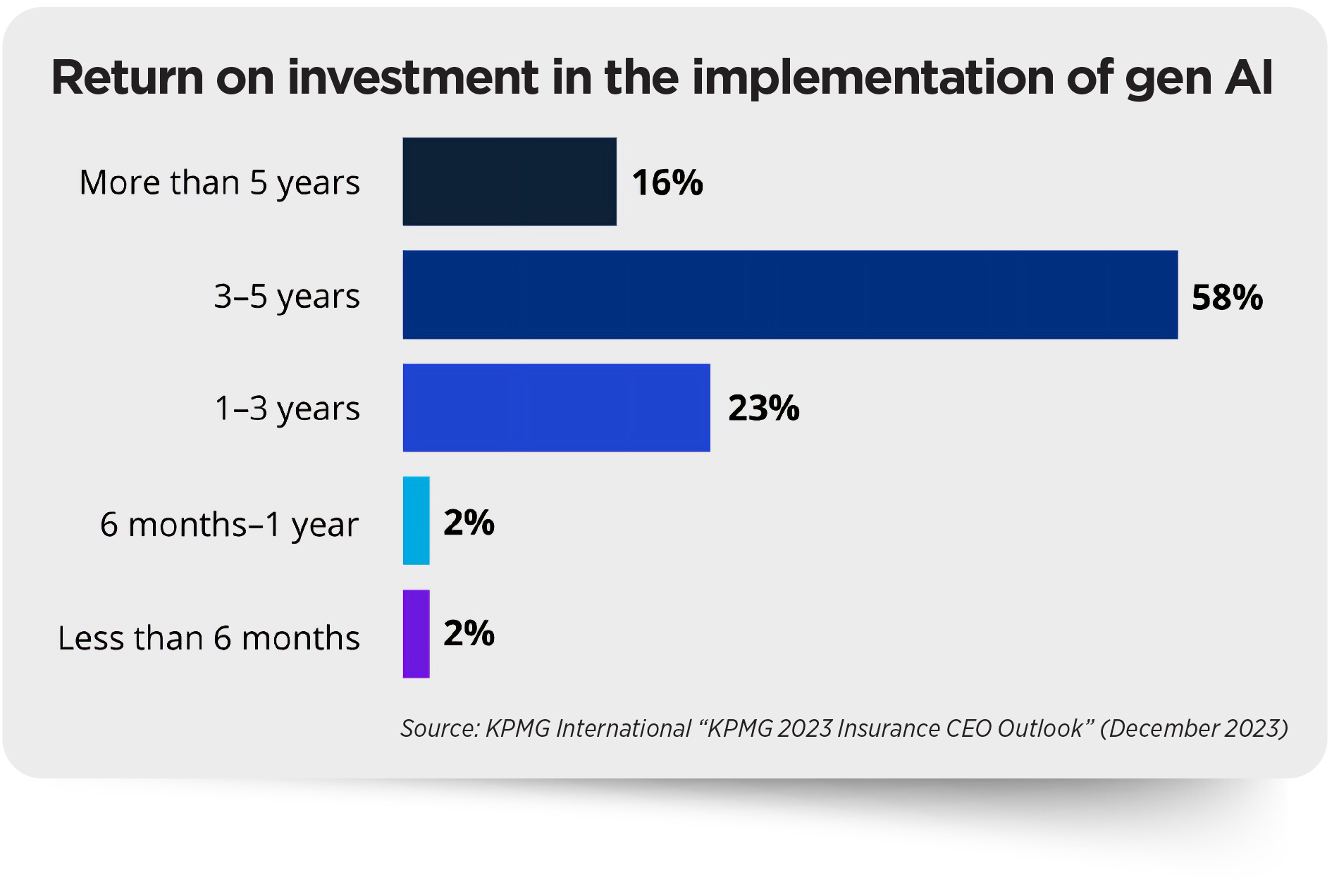

The KPMG 2023 Insurance CEO Outlook highlights a significant degree of trust in AI, with 58% of CEOs in insurance feeling confident about achieving returns on investment within five years.

“Artificial intelligence has the opportunity to be significantly transformative over the next five years,” said Dave Levenson, CEO of LIMRA and LOMA. “I would say that our industry is still moving carefully and cautiously, and of course, that’s going to vary a little bit by company, but for a technology that everybody really believes is going to be transformative, I don’t think we’ve invested as much as perhaps we can and should at this point.”

Widespread uses

Some of the most intriguing integrations of AI into insurance processes are happening in the property and casualty world.

Metromile is using telematics and AI as the basis of its pay-per-mile auto insurance concept. The insurer relies on a device installed in the vehicle to collect data on mileage, speed and driving habits. AI algorithms then analyze the collected data and generate a personalized rate for each driver.
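To make the pay-per-mile idea concrete, here is a minimal Python sketch of how such a bill could be computed: a fixed monthly base charge plus a per-mile charge, with the per-mile rate nudged up or down by driving-habit signals from the in-car device. Every rate, cap and weight in it (BASE_RATE, PER_MILE_RATE, DAILY_MILE_CAP and the behavior weights) is a hypothetical illustration value, and the simple formula stands in for whatever AI models Metromile actually uses; it is not the company's pricing.

```python
from dataclasses import dataclass

# All values below are hypothetical illustrations, not Metromile's actual pricing.
BASE_RATE = 29.00       # fixed monthly charge, dollars
PER_MILE_RATE = 0.06    # dollars per mile before any behavior adjustment
DAILY_MILE_CAP = 250    # miles per day beyond which no further charge accrues


@dataclass
class DailyTelematics:
    miles: float              # miles driven that day
    harsh_brakes: int         # hard-braking events detected by the device
    miles_over_80_mph: float  # miles driven above 80 mph


def behavior_multiplier(days):
    """Scale the per-mile rate using simple, hypothetical driving-habit signals.

    Clean driving earns a 0.9x rate; harsh braking and high-speed miles push
    the multiplier up, capped at 1.5x.
    """
    total = sum(d.miles for d in days)
    if total == 0:
        return 1.0
    brakes_per_100_miles = 100 * sum(d.harsh_brakes for d in days) / total
    speeding_share = sum(d.miles_over_80_mph for d in days) / total
    return min(1.5, 0.9 + 0.05 * brakes_per_100_miles + 0.5 * speeding_share)


def monthly_premium(days):
    """Base charge plus capped billable miles times the personalized rate."""
    billable_miles = sum(min(d.miles, DAILY_MILE_CAP) for d in days)
    per_mile = PER_MILE_RATE * behavior_multiplier(days)
    return round(BASE_RATE + per_mile * billable_miles, 2)


# A driver logging 20 clean miles a day for 30 days.
month = [DailyTelematics(miles=20, harsh_brakes=0, miles_over_80_mph=0) for _ in range(30)]
print(monthly_premium(month))  # prints 61.4
```

The daily cap is one way to keep an occasional long road trip from dominating the bill, while the multiplier is where a real insurer's behavior models would plug in.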

Nauto takes AI technology to the next level. Its AI-driven vehicle safety system employs a dual-facing camera, computer vision and algorithms to identify hazardous driving situations in real time. The company claims its AI technology has produced an 80% reduction in collisions.

Jennifer Kyung, vice president of P&C underwriting with USAA, said artificial intelligence is helping to speed up underwriting and gain more insights for underwriters.

In addition, AI can look at aerial images of property and determine whether there is a potential risk, enabling insurers to reach out to their customers about mitigation tactics. AI also can read through call transcripts and categorize recurring themes, helping insurers identify action items to improve their member service.

“It helps us look at broader swaths of data in claims files,” Kyung said during a September Insurance Information Institute webinar. “We look at it as an aid as opposed to a black box.”

Life insurers tread lightly

AI integration on the life and annuity sides of the insurance world is not as dramatic as steering vehicles away from certain accidents. But some forward-thinking insurers are pushing ahead with a commitment to technology.

Insurers are moving forward in five key areas.

Enhanced risk assessment. AI can analyze vast amounts of data to identify risks more accurately, allowing insurers to offer more tailored policies and premiums.

Predictive analytics. By forecasting trends and potential claims, AI can help insurers make informed decisions about underwriting and pricing.

Customer experience. AI-powered chatbots and virtual assistants can provide instant support, answer queries and streamline the claims process, improving overall customer satisfaction.

Claims processing. Automated systems can expedite claims handling, using AI to assess damages through image recognition and process claims more efficiently.

Fraud detection. Machine learning algorithms can detect unusual patterns and flag potentially fraudulent claims, reducing losses for insurers.
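The fraud-detection idea can be illustrated with a deliberately simple stand-in. Production systems typically rely on trained machine learning models over many claim features; the Python sketch below instead applies a robust statistical outlier test, the modified z-score based on the median absolute deviation (MAD), to claim amounts alone. The function name flag_unusual_claims and the sample amounts are hypothetical, and 3.5 is a commonly cited cutoff for this score rather than an industry standard.

```python
import statistics


def flag_unusual_claims(amounts, threshold=3.5):
    """Return indices of claims whose modified z-score exceeds the threshold.

    Modified z-score = 0.6745 * (x - median) / MAD, where MAD is the median
    absolute deviation. Using medians keeps a few extreme claims from
    distorting the baseline the way a mean and standard deviation would.
    """
    if not amounts:
        return []
    med = statistics.median(amounts)
    mad = statistics.median([abs(a - med) for a in amounts])
    if mad == 0:
        # At least half the claims equal the median, so the score is undefined.
        return []
    return [
        i for i, a in enumerate(amounts)
        if abs(0.6745 * (a - med) / mad) > threshold
    ]


# Hypothetical claim amounts in dollars; the last one is far out of line.
claims = [1200, 1350, 1100, 1250, 1300, 1150, 9800]
print(flag_unusual_claims(claims))  # prints [6]
```

In this design a flag only says a claim looks unusual relative to its peers, so flagged claims would go to a human investigator rather than being denied automatically.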

Attend any big industry conference and when artificial intelligence comes up, life insurers are quick to mention generative AI. Unlike traditional AI, which often focuses on data analysis, gen AI can create original content — text, images, music and more — based on learned patterns.

Gen AI has the potential to comprehend sentiment, empathize with consumers and respond with more relevant, personalized product offerings. That has the industry excited.

“We’ve seen a lot of interest and activity in the insurance sector on this topic, which is not surprising given that the insurance industry is knowledge-based and involves processing unstructured types of data,” said Cameron Talischi, partner with McKinsey & Co., on a recent podcast. “That is precisely what gen AI models are very good for.”

Yet, despite moving ahead with gen AI test cases and capabilities, many insurance companies are finding themselves “stuck in the pilot phase, unable to scale or extract value,” McKinsey found.

Talischi blames a “misplaced focus on technology” as opposed to what is truly important from a business perspective.

“A lot of time is being spent on testing, analyzing and benchmarking different tools such as LLMs [large language models] even though the choice of the language model may be dictated by other factors and ultimately has a marginal impact on performance,” he explained.

Likewise, insurers are often not focused on the right test cases that can have the most impact, he added.

“The better approach to driving business value is to reimagine domains and explore all the potential actions within each domain that can collectively drive meaningful change in the way work is accomplished,” Talischi said.

The dark side of AI

AI comes with major potential to save insurers money through efficiency and information analysis. But one misstep could turn into a lawsuit liability award that could wipe out all the savings and then some. That context explains in part why insurers are somewhat hesitant to go all-in with AI before doing their due diligence.

“I think when you deal with new technologies like artificial intelligence, it’s really important that everybody understands what the good things are that technology can do [and] what are the dangerous things that technology can do,” Levenson said.

To that end, LIMRA helped the industry form a committee composed of nearly 80 executives representing over 40 U.S. insurance companies. The LIMRA and LOMA AI Governance Group aims to “create a foundation for sustainable and inclusive AI practices to improve the life insurance industry.”

Its first study, “Navigating the AI Landscape: Current State of the Industry,” was completed earlier this year. It found that 100% of carriers are experimenting with or using AI in some form.

They don’t have to look further than the front pages to see the risks.

State Farm was sued in the Northern District of Illinois over claims that its AI discriminates against Black customers. The class-action suit claims State Farm’s algorithms are biased against African American names.

Plaintiffs cited a study of 800 homeowners that found discrepancies between Black and white homeowners in the way their State Farm claims were handled. Black policyholders faced more delays, for example.

Another class-action lawsuit in California alleges that Cigna used an AI algorithm to screen claims and toss them out without human review. The 2023 lawsuit was preceded by a ProPublica investigation headlined “How Cigna Saves Millions by Having Its Doctors Reject Claims Without Reading Them.”

Training is one way to reduce liability risks, but insurance companies are falling short there as well.

Regulation disparities

The rapid rise of AI and its potential for discrimination and invasion of privacy prompted some state insurance regulators to bypass the National Association of Insurance Commissioners and push through their own laws.

Colorado led the way with a sweeping bill governing the use of AI by the insurance industry. The law is so thorough in its novel requirements for developers and deployers of high-risk AI systems that progressive Gov. Jared Polis, D-Colo., wrote the Legislature expressing his “reservations.”

Polis urged the Legislature to “fine-tune the provisions and ensure that the final product does not hamper development and expansion of new technologies in Colorado that can improve the lives of individuals” as well as “amend [the] bill” if the federal government does not preempt it “with a needed cohesive federal approach.”

Colorado’s AI regulation requires life insurers to report how they review AI models and use external consumer data and information sources, which include nontraditional data such as social media posts, shopping habits, internet of things data, biometric data and occupation information that does not have a direct relationship to mortality, among others.

Life insurance companies are also required to develop a governance and risk management framework that includes 13 specific components.

Although Colorado acted first, several other states — including New York and California — are considering AI legislation to restrict how insurers handle personal and public data.

“Really what we worry about when we are building these models is, where is all this data coming from?” said Derek Leben, president of Ethical Algorithms and associate teaching professor of ethics at the Tepper School of Business at Carnegie Mellon University. “Do people have control and ownership and a say over how their data is used? Do we understand how and why models are making these decisions?”

Leben spoke during the general session “AI: How Is It Powering the Future?” at the LIMRA Annual Conference in September.

He told the LIMRA audience that on topics such as product safety liability and discrimination, “the regulations we need are already on the books.”

NAIC tackles AI

NAIC regulators are trying to keep up with AI, but work is going slowly.

The executive committee and plenary adopted the Model Bulletin on the Use of Algorithms, Predictive Models, and Artificial Intelligence Systems by Insurers in December after a deliberate process.

The bulletin is not a model law or a regulation. It is intended to “guide insurers to employ AI consistent with existing market conduct, corporate governance, and unfair and deceptive trade practice laws,” the law firm Locke Lord explained.

Some consumer advocates were disappointed by the mild language in the bulletin.

“We believe the process-oriented guidance presented in the bulletin will do nothing to enhance regulators’ oversight of insurers’ use of AI Systems or the ability to identify and stop unfair discrimination resulting from these AI Systems,” wrote Birny Birnbaum, executive director of the Center for Economic Justice.

When this issue went to press, 17 states had adopted the bulletin and an additional four states “have adopted related activity,” an NAIC spokesperson said.

In addition, regulators are surveying segments of the industry to gauge its use of AI. Fifty-eight percent of life insurers are either using or have an interest in using artificial intelligence in their businesses, an NAIC working group found.

The 58% figure is well below the shares of home (70%) and auto (88%) insurers that reported using or wanting to use AI in earlier surveys.

Third-party vendors are developing a lot of the AI and machine learning technology that is proliferating in the insurance industry. That creates a lack of control for insurers and regulators. NAIC regulators were concerned enough to establish a 2024 task force devoted to regulation of third-party systems.

The Third-Party Data and Models Task Force began meeting in March. It is charged with:

» Developing and proposing a framework for the regulatory oversight of third-party data and predictive models; and

» Monitoring and reporting on state, federal, and international activities related to governmental oversight and regulation of third-party data and model vendors and their products and services.

Regulators all but confirmed that it will be difficult to keep pace with the evolving power of AI.

“I feel like the industry is moving very fast in this space,” Vermont Insurance Commissioner Kevin Gaffney said during a work session on the topic. “We are trying to keep up. It would be nice to say we could stay ahead, but I think the realistic vision right now is to make sure that we’re still in the rearview mirror of industry.”

InsuranceNewsNet Senior Editor John Hilton has covered business and other beats in more than 20 years of daily journalism. John may be reached at [email protected]. Follow him on Twitter @INNJohnH.